Author: sam

I used Amazon Alexa on the Focals, asked for a cafe nearby and requested directions.

It’s kind of like a smartwatch on your face.

Tech:

_ Optics: Laser

_ 15 degree viewing area

_ 300 x 300px

_ Monocular

i/o

_ Microphone

_ Voice to Text with Amazon Alexa

_ Speaker

_ No Camera

Experience:

The Loop (the ring) – an input device like a joystick on a ring.

Customer service – impeccable.

You get your face scanned.

“Hard to see in daylight

The Ring input device is water resistant.

Mapping Service is Mapbox

Showed “lost internet connection”

[Issues with mapping – does not provide a very accurate position of your location.]

Needed to create a different number to receive text notifications

Text messages are not sent directly to the Focals; they go to North’s server, which then sends a message to the Focals.

Can only order Uber X.

I grew to enjoy having notifications.

They feel like the future.”

You can check them out in Toronto and Brooklyn. The environment, the customer service and even the response time from the Focals team on social media are really impressive.

Learn more here https://www.bynorth.com/focals

Visit in BK here

NORTH COBBLE HILL

178 Court St | Brooklyn, NY 11201

A visual positioning system built on computer vision and machine learning.

It works with anything that has been documented by Street View: buildings, for example, but not trees, because their branches get cut and they lose their leaves.

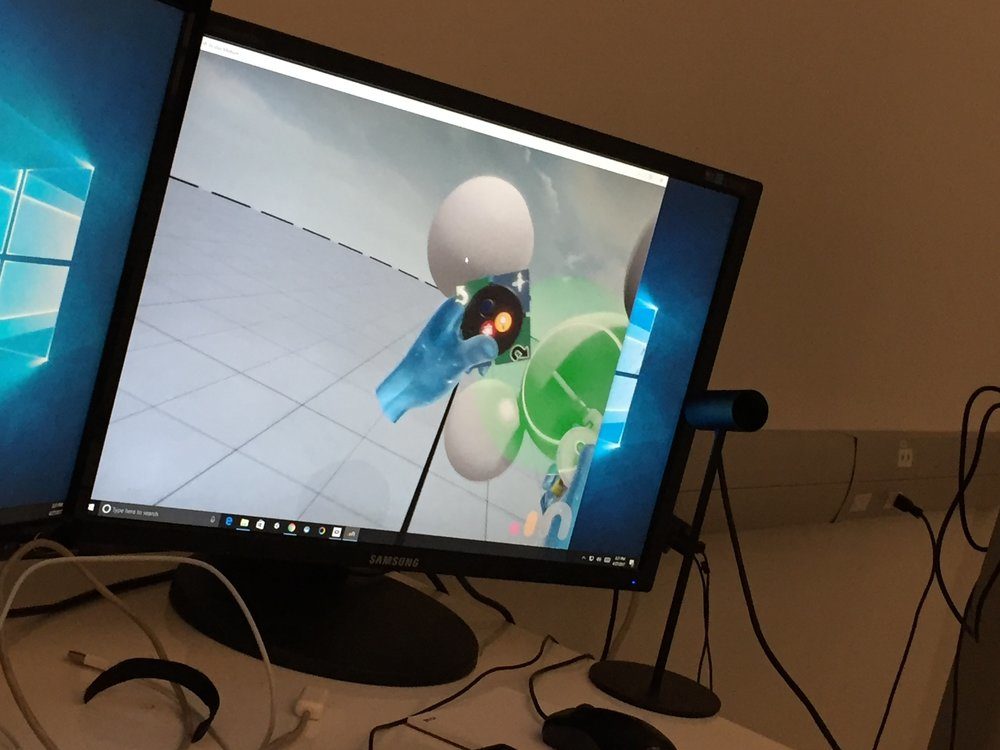

Oculus Medium – creating in VR

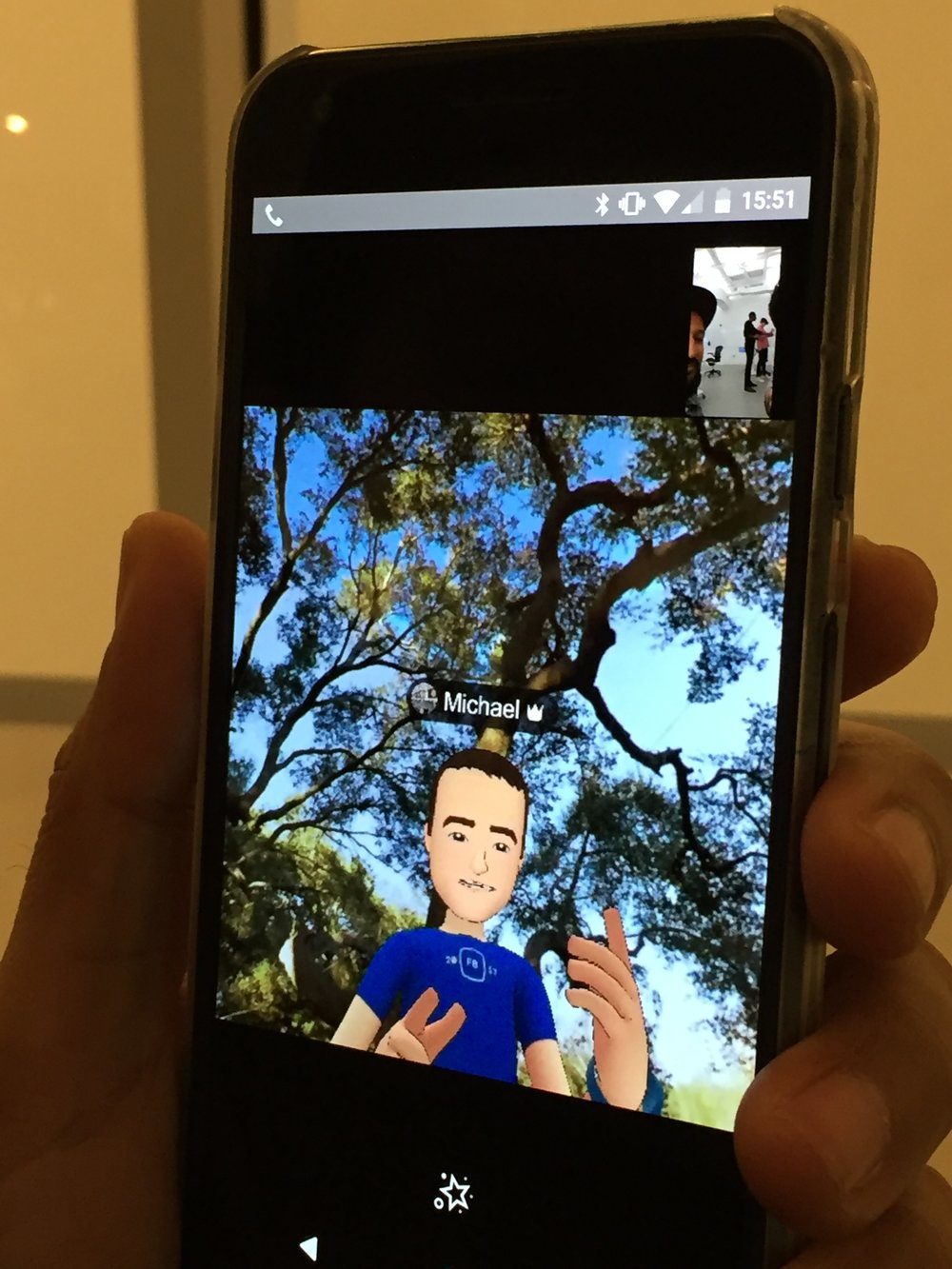

Facebook Spaces – social VR where you can video call your friend. Your friend can answer via Facebook Messenger so you can see a video of them, and they’ll view your auto-generated, customized, live avatar.

September 2018

Should you have to walk across a map to access something in AR?

Does AR have to start hidden?

Does it have to be something you could find?

Does audio have to be defined by different locations?

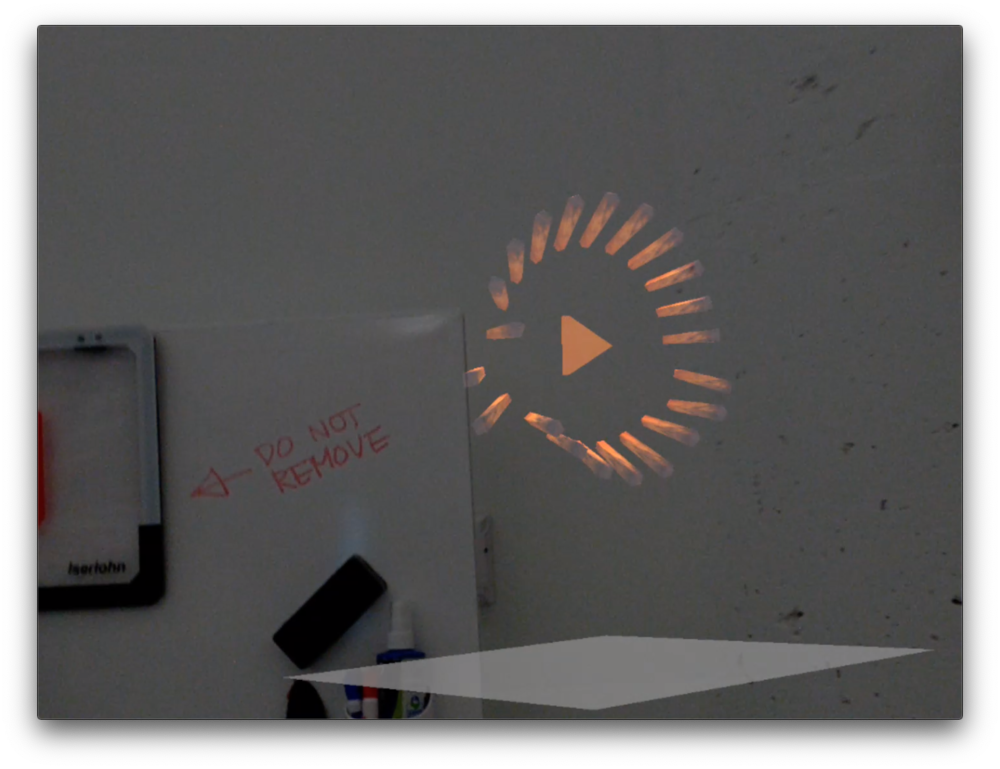

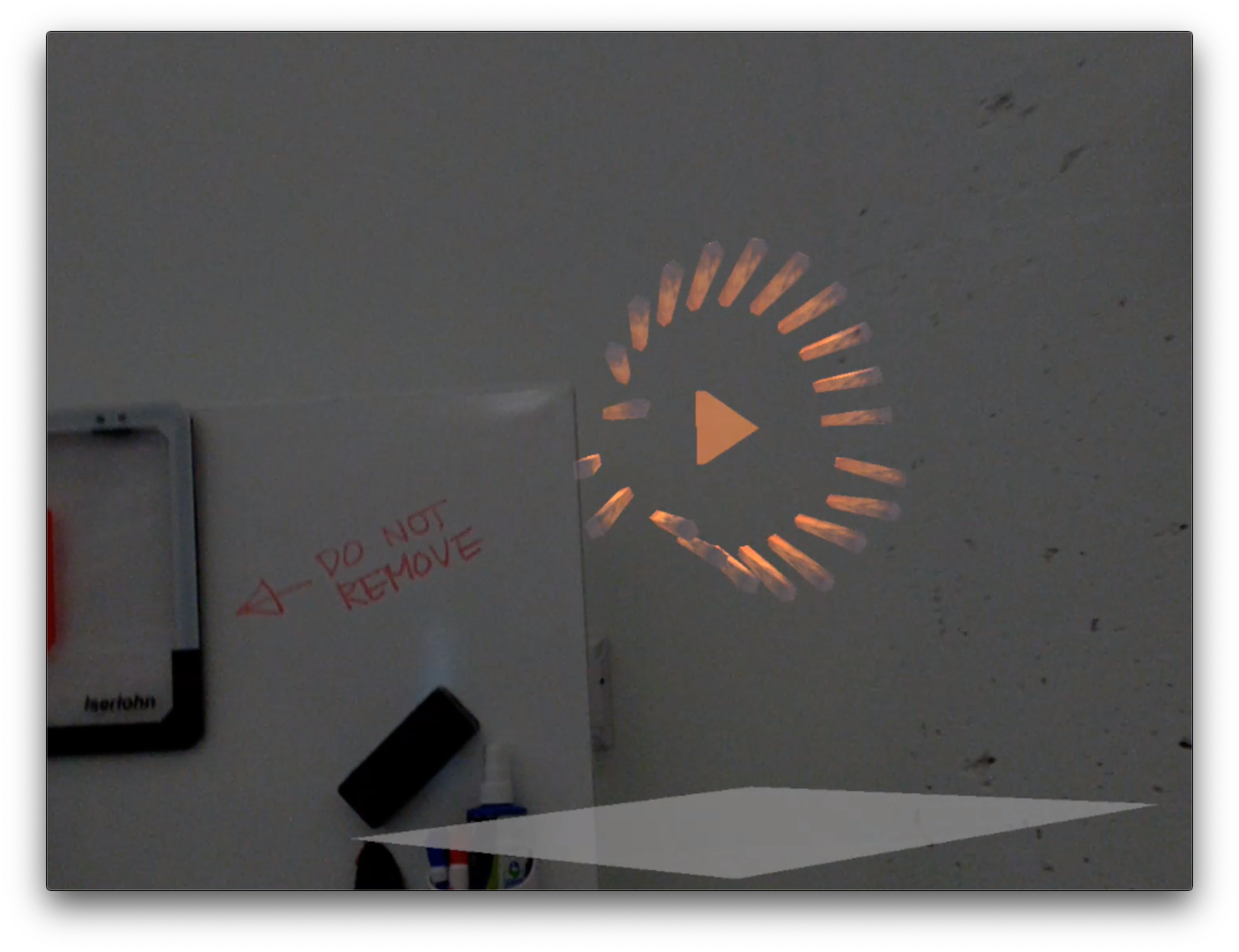

Spatial Audio next to a whiteboard

AR does not have to be something that is hidden and then found. AR should be contextually visible and accessible, not something that is a challenge to access. In fact, AR should be the interface to all of the technological advancements that we could not otherwise see.

AR should not be hidden. AR should be interactive. In this case, the sound is spatial and therefore interactive, because you can turn away from the sounds (three rotational degrees of freedom) and walk away from them (three more degrees of freedom, for position).
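To make the rotation and position distinction concrete, here is a minimal Python sketch of how a sound's apparent direction could be derived from the listener's pose. It is a simplification and not how Magic Leap or Unity spatialize audio: it works on a 2D floor plan, uses only yaw out of the three rotations, and the function names (`relative_azimuth`, `stereo_gains`) are hypothetical.

```python
import math

def relative_azimuth(listener_xz, listener_yaw_deg, source_xz):
    """Angle of the source relative to where the listener is facing.

    Assumed convention: yaw is measured clockwise from the +z axis,
    0 means straight ahead, positive means to the listener's right.
    The result is in the range [-180, 180) degrees.
    """
    dx = source_xz[0] - listener_xz[0]
    dz = source_xz[1] - listener_xz[1]
    bearing = math.degrees(math.atan2(dx, dz))  # world bearing of the source
    return (bearing - listener_yaw_deg + 180.0) % 360.0 - 180.0

def stereo_gains(azimuth_deg):
    """Equal-power left/right gains from the relative azimuth (a rough pan)."""
    pan = math.sin(math.radians(azimuth_deg))   # -1 = hard left, +1 = hard right
    angle = (pan + 1.0) * math.pi / 4.0
    return math.cos(angle), math.sin(angle)     # (left, right)
```

Turning your head changes only `listener_yaw_deg`, while walking changes `listener_xz`. Either one moves the sound in your ears, which is what makes the audio interactive.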

If the concept is music, sound or audio across space then make the audio spatial.

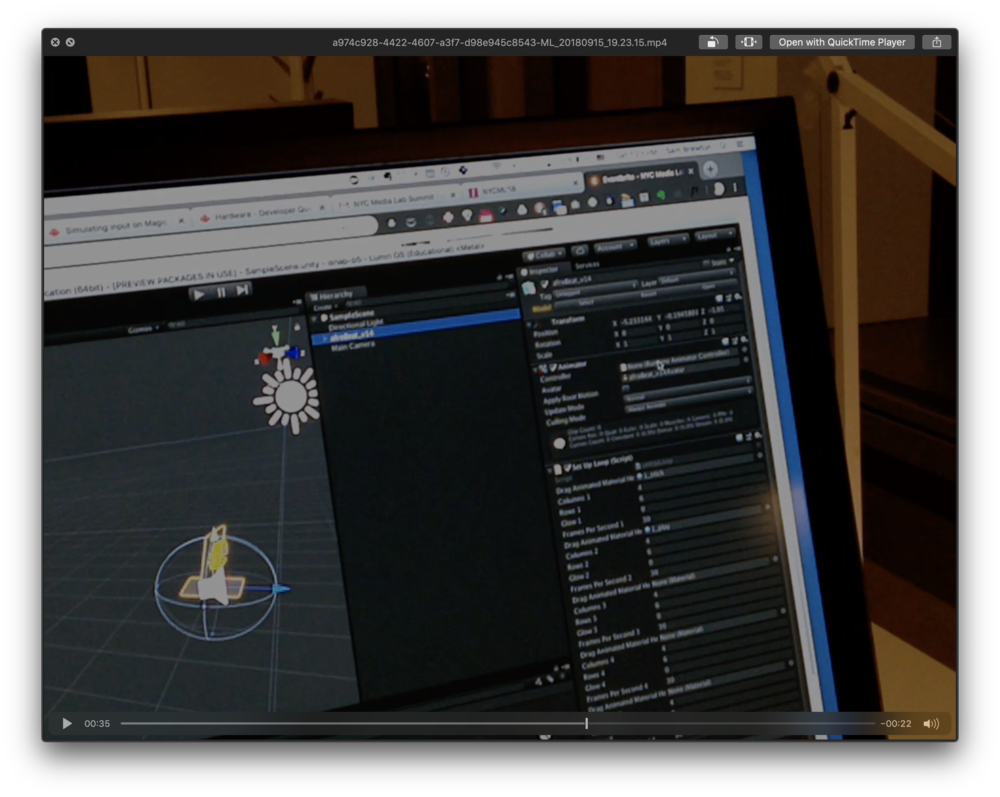

Here I took static Cinema 4D animations that were intended to be linear, non-interactive Snap lenses and deployed them to the Magic Leap.

While wearing the Magic Leap, I adjusted the radius of the spatial audio in Unity for the next build.

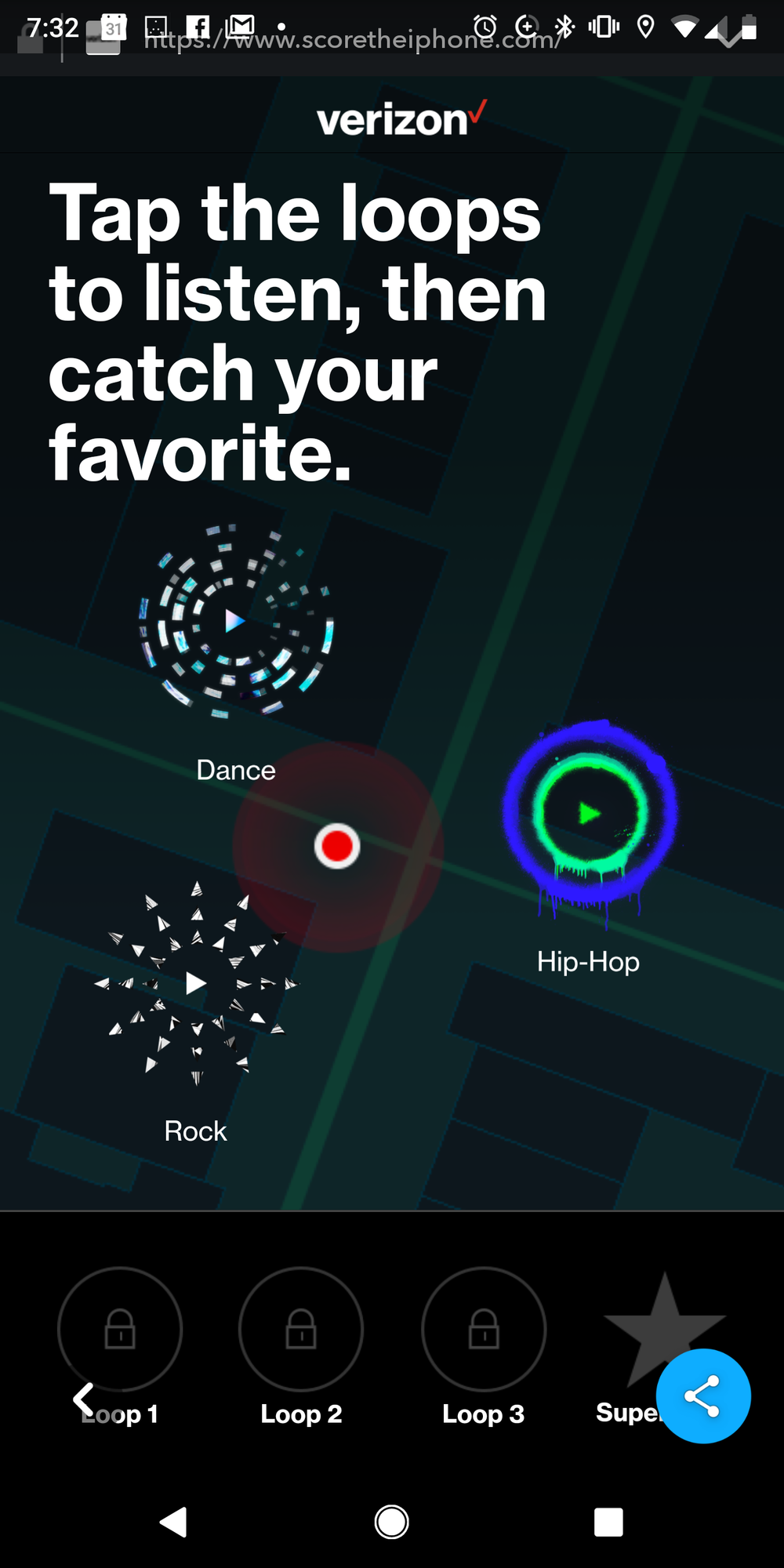

There were different beats and tunes, and with these loops placed in different locations you could walk around the music.

Play the video above with the sound on to hear the spatial audio.
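As a rough illustration of loops placed around a space, each with its own audible radius, here is a small Python sketch. It assumes a simple linear falloff to silence at the edge of the radius; the real build used Unity's audio settings, which are not reproduced here. The `Loop` class, the loop names and the positions are all made up for the example.

```python
import math
from dataclasses import dataclass

@dataclass
class Loop:
    name: str
    position: tuple  # (x, z) in meters on a hypothetical floor plan
    radius: float    # distance at which the loop fades to silence (must be > 0)

def loop_gain(listener, loop):
    """Linear falloff: 1.0 at the loop's position, 0.0 at or beyond its radius."""
    distance = math.dist(listener, loop.position)
    return max(0.0, 1.0 - distance / loop.radius)

def mix(listener, loops):
    """Volume of every loop as heard from the listener's position."""
    return {loop.name: round(loop_gain(listener, loop), 3) for loop in loops}

# Two example loops six meters apart, each audible within four meters.
loops = [
    Loop("drums", (0.0, 0.0), 4.0),
    Loop("bass", (6.0, 0.0), 4.0),
]
```

Calling `mix((1.0, 0.0), loops)` gives a mix dominated by the drums, and `mix((3.0, 0.0), loops)` sits halfway between the two loops. Adjusting `radius` changes how far you have to walk before one loop gives way to the next.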

Ironically, in the application I built there was no way to reset the origin of the experience, and with Magic Leap’s persistence, even when I went to the center of the building’s floor plan to launch the music in the cafe, the spatial audio was half a block away.

2D Screenshots from Snapchat

September 2018

The horizontal tracking started at the height of a standing table (bar height, at the elbows), so the cube, at 1.6 meters high, was above my view.

The basic “Hello World” example from Magic Leap is “Hello Cube”

Fittingly at R/GA

Full video:

Notes from Savannah Niles’ talk at MIT Media Lab Reality Virtually Hackathon.

On localized and contextualized apps. The thought of bespoke apps naturally speaks to applications that are tailored to your exact locale based on local knowledge.

See the video below. The notes in the full post (below) include the Q&A featured in the video.

LOCALIZED AND CONTEXTUAL APPS

Not one interface to rule them all

At MIT we don’t like to only think about Singleton solutions

But dealing with the locale, the space, and the context

As the medium emerges, something more powerful

To authentically integrate digital content into the real world

Start innovating solutions that are site specific and relative to context

INPUT

[Started with a tight input palette]

James made the initial eye-tracking graffiti app (formerly of Graffiti Research Lab)

@jamespowderly

5-way controller – able to grow to 6DoF, but also the simplest version to be used for accessibility

Number of calories burned = similar to number of clicks

Gentle interfaces, highly responsive

Redundant input is natural and gives users a choice

———————

Embrace collisions and physics when you can

Interactivity is reactivity – make something that can react with your environment

Graceful degradation

(Eg being derezzed)

Eg particle effects and portals – whenever something breaks in your environment reconstruction

——————

Classically taught: recognition over recall – the Don Norman thought

That works on screens, but

The more minimal you render your interface

Morally mash it on and off with buttons, but reveal UI with head-pose gaze – gaze signals attention

————————

There has to be a button to go back to reality.

(There has to be a way to dismiss everything / remove everything from your view).

In testing QA said “Hey, there’s a bug. When you press this button you can’t see anything.”

————————

Use affordances of the physical world to interact with digital things

We’ll communicate with apps like we communicate with each other

From gesture to conversation, to gaze, to direct focus

E.g. the “put that there” demo that we’ve all been told about since the 70s

————————

Her full-time focus is on shared experiences

Spatiate – multi-person multi-platform drawing application

————

Future of input or interaction?

She personally thinks it is interacting on physical surfaces – e.g. tapping on a table

Being able to switch from fine to ballistic control – from nearby to further objects in the distance

Q&A

————

Skeuomorphism for touch devices was necessary

We’ll develop a model for using this device

We use headpose as an input – it is fundamental (even in normal h2h culture)

But people got tripped up on headpose

We’ll probably have buttons for a while

————

Everyone will try this as the technology is going viral

Consistency across apps and the ecosystem

————

Hire more diverse teams

underrepresented people with diverse points of view

representations

Educate ourselves

Read more and look at models like the wiki

Collective Authorship

Training in VR saves money and probably increases learning, retention and value creation over time at scale.

I believe AR in manufacturing immediately increases efficiency, output and value.

“Virtual reality training by companies like Microsoft is saving lives, millions of dollars, and ensuring the future of mixed reality”

AR ruled.

Despite the sad goodbye of some AR innovators at the end of 2018, there is no slowdown; there is in fact one more SLAM HMD (glasses that understand the physical space around you).

SLAM (simultaneous localization and mapping) is in many devices: robots, vacuum cleaners, drones, cars, glasses. Computer vision is now good enough that SLAM can run from an RGB camera in a mobile web browser (see 8th Wall).

Two products that my work touched launched at CES this year.

- The Apprentice platform – for telepresence and remote collaboration.

- Lovot – a heart-warming robot from Japan.

(That doesn’t mean the next step is to control a SLAM robot via your glasses).